Hackathon & me

Machine Learning hackathons are a recreational activity for me. Until last night, my last indulgence was sometime around 2021, during the COVID-19 era, on the Zindi platform. Half the intent here is to acknowledge that I've recently taken up DSN and Microsoft Skills for Jobs as a pastime with an underlying research angle, and so far I've come to enjoy the entire process all over again. It's thrilling to see how minor adjustments can move one up the leaderboard: self-gratification first and then, possibly, the inevitable shakedown as other similarly skilled data scientists improve their models.

Basically, until the competition ends, one's position on the leaderboard reflects performance on only a subset of the test data provided by the organizers; the final ranking is computed on held-back data. Hence, the shakedown. There are other technicalities to it (for instance, "overfitting to the holdout"), but that would be a bore. Read this paper if you're interested in the shenanigans the leaderboard can get up to.
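To make the shakedown concrete, here is a toy simulation with made-up data. It assumes a 30/70 public/private split of the test rows (the organizers' actual split isn't stated here) and scores two hypothetical models of almost identical quality on each part. Rerun it with different seeds and the two subsets will not always agree on which model is better.

```python
import numpy as np

rng = np.random.default_rng(42)  # fixed seed so the toy results are reproducible

n = 1_000
y_true = rng.normal(size=n)

# Two hypothetical models whose errors have almost the same spread
pred_a = y_true + rng.normal(scale=0.50, size=n)
pred_b = y_true + rng.normal(scale=0.51, size=n)

# Assumed split: ~30% of test rows feed the public board, the rest the private one
is_public = rng.random(n) < 0.3


def rmse(y, p):
    return float(np.sqrt(np.mean((y - p) ** 2)))


for name, pred in [("A", pred_a), ("B", pred_b)]:
    public = rmse(y_true[is_public], pred[is_public])
    private = rmse(y_true[~is_public], pred[~is_public])
    print(f"model {name}: public={public:.4f} private={private:.4f}")
```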

Every ML competition follows the same format: Objective, Timeline, Data (partially or fully provided depending on the learning task, and typically partitioned), Evaluation method, Prize (ranging from points to $$, even jobs), and RULES. This one is no different:

Objective

The objective of this hackathon is to create a powerful and accurate predictive model that can estimate the prices of houses in Nigeria. By leveraging the provided dataset, you will analyze various factors that impact house prices, identify meaningful patterns, and build a model that can generate reliable price predictions. The ultimate goal is to provide Wazobia Real Estate Limited with an effective tool to make informed pricing decisions and enhance their competitiveness in the market.

Timeline

This challenge starts on 12 June at 13:00. Competition closes on 29 July at midnight. ...

Rules

If your solution places 1st, 2nd, or 3rd on the final leaderboard, you will be required to submit your winning solution code to us for verification, and you thereby agree to assign all worldwide rights of copyright in and to such winning solution to Zindi.

Every other detail can be seen here.

I'm making this notebook public so that participants can derive an idea or two from my implementation and perhaps get into the top 3%.

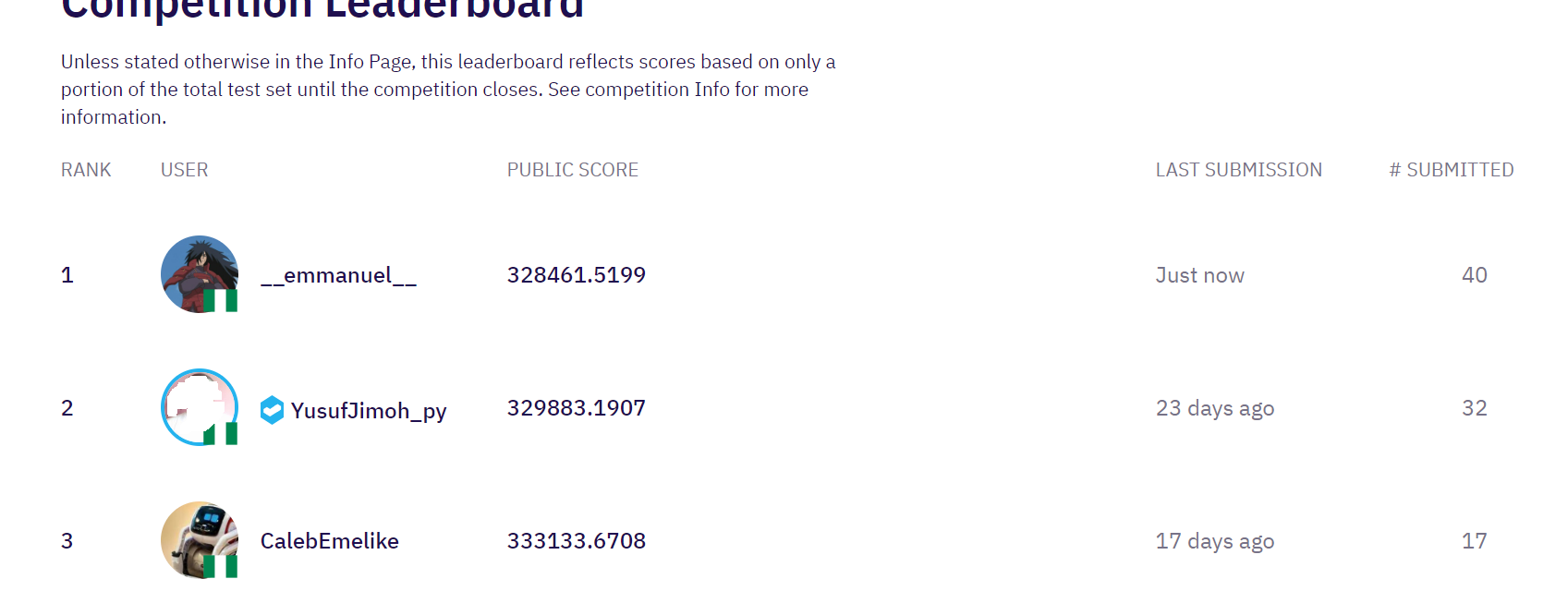

I presently sit in 2nd position (previously 1st) with a very similar approach.

Screenshot of the Zindi leaderboard.

A random seed is used mainly for reproducibility. It is an arbitrary number that, as long as it is passed wherever randomness is involved, ensures the results in this notebook can be reproduced when run on another machine.

seed = 42 #the meaning of life
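As a small illustration of "passed wherever randomness is involved", the sketch below (toy arrays, not the housing data) shows that scikit-learn's train_test_split returns exactly the same split whenever the same random_state is passed, which is what the train/validation split later in this notebook relies on.

```python
import numpy as np
from sklearn.model_selection import train_test_split

seed = 42
X = np.arange(20).reshape(10, 2)  # toy features
y = np.arange(10)                 # toy target

# Same random_state -> identical split on every call (and every machine)
Xa, Xb, ya, yb = train_test_split(X, y, test_size=0.2, random_state=seed)
Xc, Xd, yc, yd = train_test_split(X, y, test_size=0.2, random_state=seed)

print(np.array_equal(yb, yd))  # True
```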

# import computation/visualization packages

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

%matplotlib inline

#import provided data

submission = pd.read_csv('Sample_submission.csv')

train = pd.read_csv('Housing_dataset_train.csv')

test = pd.read_csv('Housing_dataset_test.csv')

train.shape, test.shape, test.shape[0] == submission.shape[0]

train.head(2)

pandas.DataFrame.sample returns a random sample of items from an axis of the object. The two lines below show that although sample draws rows at random, setting random_state makes the draw deterministic: the same rows are returned over and over again.

test.sample(3, random_state=seed) #random_state set

test.sample(3, random_state=seed) #random_state set to same seed for reproducibility
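The same idea on a tiny made-up DataFrame: two calls to sample with an identical random_state return the same rows in the same order.

```python
import pandas as pd

seed = 42
df = pd.DataFrame({"ID": range(10), "bedroom": [1, 2, 3, 4, 5, 1, 2, 3, 4, 5]})  # made-up rows

first = df.sample(3, random_state=seed)
second = df.sample(3, random_state=seed)

# Same seed -> same rows, in the same order
print(first.index.equals(second.index))  # True
```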

def check_nan_percentage(df):
    """return the percentage of NaNs in every column"""
    return df.isna().mean()*100

check_nan_percentage(train)

check_nan_percentage(test)

Since the test set has no missing values (as seen above), any imputation is only needed for the train set, which saves a bit of time. Let's focus on the train set.

sns.pairplot(train)

train['price'].hist(bins=50, figsize=(15,6))

plt.show()

train['price'].describe()

train.loc[:, 'price']= np.log1p(train['price'])

train['price'].head()

train['price'].hist(bins=50, figsize=(15,8))

plt.show()
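The target is now modelled as log1p(price), and further down predictions are mapped back with np.expm1 before submission. A quick check on made-up prices that the two functions are inverses, and that an RMSE computed on the log1p scale is the same quantity as RMSLE on raw prices (whether the competition scores on raw prices or on a log scale isn't stated in this notebook, so treat the RMSLE link as background):

```python
import numpy as np

prices = np.array([1_000_000.0, 2_500_000.0, 15_000_000.0])  # made-up prices

log_prices = np.log1p(prices)     # log(1 + price): compresses the long right tail
restored = np.expm1(log_prices)   # exp(x) - 1: the exact inverse of log1p
print(np.allclose(restored, prices))  # True

# RMSE on the log1p scale == RMSLE on the raw prices
pred = np.array([1_100_000.0, 2_400_000.0, 12_000_000.0])  # made-up predictions
rmse_log = np.sqrt(np.mean((np.log1p(pred) - log_prices) ** 2))
```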

#check if ID is unique and follows no pattern.

pd.Series(train['ID']).is_unique

train['ID'].hist(bins=30, figsize=(15,7))

train[train['ID'] == 3583]

train['ID'].describe()

train.loc[:, 'ID'] = np.log1p(train['ID'])

test.loc[:, 'ID'] = np.log1p(test['ID'])

test['ID'].describe()

sns.histplot(train.loc[:, 'ID'])

sns.histplot(test.loc[:, 'ID'])

sns.pairplot(train, hue='bedroom')

sns.barplot(data=train, x='title', y='bedroom')

sns.barplot(data=train, x='title', y='bathroom')

sns.barplot(data=train, x='title', y='parking_space')

sns.pairplot(train)

sns.boxplot(data=train, y='price')

X = train.dropna().drop(['loc', 'title', 'price'], axis=1)

y = train.dropna()['price']

# get outliers/inliers

from sklearn.linear_model import RANSACRegressor, LinearRegression

ransacor = RANSACRegressor(LinearRegression(), min_samples=60, loss='squared_error', residual_threshold=5.0, random_state=seed)

ransacor.fit(X, y)

inliers = ransacor.inlier_mask_

outliers = np.logical_not(inliers) #inverted-inliers

X[inliers]

X[outliers]
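RANSAC repeatedly fits the base estimator (here LinearRegression) on small random subsets, keeps the candidate that agrees with the most points within residual_threshold, and exposes that consensus as inlier_mask_. A sketch on synthetic data (not the housing set) with five deliberately corrupted targets:

```python
import numpy as np
from sklearn.linear_model import LinearRegression, RANSACRegressor

rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(100, 1))
y = 3.0 * X[:, 0] + rng.normal(scale=0.1, size=100)  # clean linear relationship
y[:5] += 40.0  # corrupt the first five targets into gross outliers

ransac = RANSACRegressor(LinearRegression(), residual_threshold=5.0, random_state=42)
ransac.fit(X, y)

inliers = ransac.inlier_mask_
outliers = np.logical_not(inliers)  # inverted inliers, as in the cell above
print(outliers[:5])
```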

train = train.dropna()

q75 = train['price'].quantile(0.75)

q25 = train['price'].quantile(0.25)

iqr = q75-q25

high, low = q75 + (1.5 * iqr), q25 - (1.5 * iqr) #my sweet chariotttt

high, low

train[train['price'] > high].shape

train[train['price'] < low].shape

sns.histplot(train[train['price'] < high]['price'])

train = train[train['price'] < high]

train = train[train['price'] > low]
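The quartile/IQR filtering above (Tukey's fences at 1.5 × IQR) can be wrapped in a small reusable helper. This is a sketch, not the notebook's exact code: it keeps values inside the fences inclusively via Series.between, whereas the cells above use strict < and >, so results can differ only for values sitting exactly on a fence. The helper names are illustrative.

```python
import pandas as pd


def iqr_bounds(s: pd.Series, k: float = 1.5):
    """Return the (low, high) Tukey fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def drop_iqr_outliers(df: pd.DataFrame, col: str, k: float = 1.5) -> pd.DataFrame:
    low, high = iqr_bounds(df[col], k)
    # between() is inclusive on both ends
    return df[df[col].between(low, high)]


prices = pd.DataFrame({"price": [10, 11, 12, 13, 14, 100]})  # made-up values
print(iqr_bounds(prices["price"]))         # fences at 7.5 and 17.5
print(drop_iqr_outliers(prices, "price"))  # the 100 row is dropped
```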

sns.pairplot(train)

#check correlation

corrmat = train.corr(numeric_only=True)  # numeric_only: pandas >= 2.0 raises on the text columns otherwise

corrmat["price"].sort_values()

plt.figure(figsize=(15,7))

sns.heatmap(corrmat,annot=True,center=0)

sns.boxplot(data=train, y='price')

check_nan_percentage(train)

train['title'].value_counts()

k = train[['title', 'bedroom', 'bathroom']].groupby('title')

k.mean()

k.agg(['sum', 'mean', 'std'])

#group dataset by title to check mean housing price

train.groupby(['title'])['price'].mean().reset_index()

train.groupby(['bedroom'])['bathroom'].median().reset_index()

train.groupby(['title', 'bedroom'])['price'].mean().reset_index()

train['title'].value_counts()

train[(train['title'] == 'Townhouse') & (train['bedroom'] == 9)].head()

train.groupby(['loc'])['price'].mean().reset_index()

train.groupby(['loc'])['bedroom'].std().reset_index().head()

train.groupby(['ID'])['bedroom'].mean().reset_index().head()

train[(train['ID'] == 0)]

sns.scatterplot(data=train, x='price', y='ID', hue='bedroom')

train['ID'].min(), train['ID'].max(), test['ID'].min(), test['ID'].max()

q1 = train['ID'].quantile(0.25)

q3 = train['ID'].quantile(0.75)

qr = q3 - q1

high = q3 + 1.5 * qr

low = q1 - 1.5 * qr

low, high

train[~((train['ID'] < low) | (train['ID'] > high))].corr(numeric_only=True), train[(train['ID'] < low) | (train['ID'] > high)].shape

sns.scatterplot(data=train[~((train['ID'] < low) | (train['ID'] > high))], x='price', y='ID', hue='bedroom')

# keep only rows whose ID lies inside [low, high]; the whole OR must be negated
train = train[~((train['ID'] < low) | (train['ID'] > high))]
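A subtlety when combining boolean masks in pandas: ~ binds more tightly than |, so ~a | b means (~a) | b, not ~(a | b). To keep only the rows inside both fences, the whole OR has to be negated. A toy check with made-up values:

```python
import pandas as pd

s = pd.Series([1, 5, 9])  # made-up values
low, high = 2, 8

too_low, too_high = s < low, s > high

loose = ~too_low | too_high     # parsed as (~too_low) | too_high: keeps 9
strict = ~(too_low | too_high)  # only values inside [low, high]

print(loose.tolist())   # [False, True, True]
print(strict.tolist())  # [False, True, False]
```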

train.head(2)

sns.boxplot(x=train['ID'])

train[train['title'] == 'Mansion']

print(train.loc[(train['title'] == 'Detached duplex')]['bedroom'].mode())

print(train.loc[(train['title'] == 'Bungalow')]['bedroom'].mode())

print(train.loc[(train['title'] == 'Cottage')]['bedroom'].mode())

mansion_bed1 = train.loc[(train['title'] == 'Mansion') & (train['bedroom'] == 1)]

mansion_bed1.head(), mansion_bed1.shape

# check correlation after dropping mansions that oddly have just 1 bedroom

corrmat = train.drop(mansion_bed1.index.to_list(), axis=0).corr(numeric_only=True)

corrmat["price"].sort_values()

plt.figure(figsize=(15,7))

sns.heatmap(corrmat,annot=True,center=0)

train = train.drop(mansion_bed1.index.to_list(), axis=0)

train.head()

# train[['bedroom', 'bathroom', 'parking_space']] = train[['bedroom', 'bathroom', 'parking_space']].fillna(0)

# c = train.copy()

# c[['bedroom', 'bathroom', 'parking_space']] = c[['bedroom', 'bathroom', 'parking_space']].fillna(0)

# c

train.dropna(inplace=True)

train.reset_index(drop=True, inplace=True)

train

build_types = train['title'].unique()

build_types

# check house-type (title) statistics by bedroom count

def check_median_house(build_types: list, df):
    medians = []
    for build_type in build_types:
        # filter on df itself (not the global train) so the function works on any frame
        median = df.loc[df['title'] == build_type, 'bedroom'].median()
        medians.append(median)
        print(build_type, median)
    return medians

def check_mode_house(build_types: list, df):
    for build_type in build_types:
        print(build_type, df.loc[df['title'] == build_type, 'bedroom'].mode())

# data = {

# 'build_types': build_types,

# 'df': train

# }

# medians = check_median_house(**data)

# print('=======')

# check_mode_house(**data)

check_nan_percentage(train)

train.describe().T

train.describe(include='O').T

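def feature_eng(df):
    # adds ratio, sum and interaction features to df in place
    df['bed_per_bath'] = df['bedroom'] / df['bathroom']
    df['bed_per_park'] = df['bedroom'] / df['parking_space']  # inf wherever parking_space == 0
    df['allrooms'] = df['bedroom'] + df['bathroom'] + 1
    df['IDbath'] = df['ID'] * df['bathroom']
    df['IDbed'] = df['ID'] * df['bedroom']
    return df.sample(5)  # a preview only; callers rely on the in-place changes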

train_locprice_std = train.groupby('loc')['price'].std().astype(np.float16)

train_titleprice_std = train.groupby('title')['price'].std().astype(np.float16)

train_bedprice_std = train.groupby('bedroom')['price'].std().astype(np.float16)

#shameful naming

train['x'] = train['loc'].map(train_locprice_std)

train['y'] = train['title'].map(train_titleprice_std)

train['z'] = train['bedroom'].map(train_bedprice_std)

train.head()

train.corr(numeric_only=True)['price']

test['x'] = test['loc'].map(train_locprice_std)

test['y'] = test['title'].map(train_titleprice_std)

test['z'] = test['bedroom'].map(train_bedprice_std)

test.head()
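The x/y/z columns are a form of group-statistic encoding: a per-category statistic of the target (here the standard deviation of log price) is learned from train and looked up for each row. A minimal sketch with made-up rows. Categories absent from train, and single-row groups (whose sample std is undefined), both come back as NaN. Because the statistics in this notebook are computed on the full train set before the train/validation split further down, the validation scores may be slightly optimistic.

```python
import numpy as np
import pandas as pd

train = pd.DataFrame({
    "loc": ["lagos", "lagos", "lagos", "kano", "kano"],
    "price": [15.0, 15.4, 14.6, 13.0, 13.2],  # made-up, already on the log1p scale
})
test = pd.DataFrame({"loc": ["kano", "lagos", "yobe"]})  # 'yobe' never appears in train

# Per-group statistic computed on train only ...
loc_price_std = train.groupby("loc")["price"].std()

# ... then looked up row by row; unseen categories map to NaN
test["loc_price_std"] = test["loc"].map(loc_price_std)
print(test)
```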

#combine train and test dataset

train['marker'] = 'train'

test['marker'] = 'test'

combo = pd.concat([train, test], axis=0)

combo.head()

feature_eng(combo)

corrmat = combo[combo['marker'] == 'train'].corr(numeric_only=True)

corrmat["price"].sort_values()

plt.figure(figsize=(15,7))

sns.heatmap(corrmat,annot=True,center=0)

bed_median = np.median(combo['bedroom'])

# privacy = 1 when bathroom > bedroom - (bed_median - 1): bathrooms keep pace with bedrooms (covers bedroom == bathroom), so privacy is ensured; this also flags rows where the bathroom count was entered as the bedroom count

combo.loc[(combo['bathroom'] > (combo['bedroom']-(bed_median-1)))]

combo.loc[(combo['bathroom'] > (combo['bedroom']-(bed_median-1))), 'privacy'] = 1

combo.loc[~(combo['bathroom'] > (combo['bedroom']-(bed_median-1))), 'privacy'] = 0

combo.loc[:, 'privacy'] = combo['privacy'].astype('category')

combo.loc[:, 'privacy'] = combo['privacy'].cat.codes

combo.sample(5, random_state=seed)

combo[combo['marker'] == 'train'].corr(numeric_only=True)['price']

# same technique as above: if there is sufficient parking space, then a big area (otherwise okay/low), likely shared

combo.loc[(combo['parking_space'] >= (combo['bedroom']-(bed_median-1)))]

combo.loc[(combo['parking_space'] > (combo['bedroom']-(bed_median-1))), 'space_area'] = 1

combo.loc[~(combo['parking_space'] > (combo['bedroom']-(bed_median-1))), 'space_area'] = 0

combo.loc[:, 'space_area'] = combo['space_area'].astype('category')

combo.loc[:, 'space_area'] = combo['space_area'].cat.codes

#using cat.codes here, as high privacy/space_area is more valued
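The privacy and space_area flags can also be written with np.where. This sketch on made-up rows uses the same rule (count > bedroom - (bed_median - 1)). One difference worth knowing: astype('category').cat.codes numbers whichever categories are present, so if every row happened to be flagged 1, the codes would all be 0; np.where always yields literal 0/1.

```python
import numpy as np
import pandas as pd

combo = pd.DataFrame({  # made-up rows
    "bedroom": [2, 4, 6, 3],
    "bathroom": [1, 4, 3, 5],
    "parking_space": [1, 1, 3, 2],
})
bed_median = combo["bedroom"].median()
threshold = combo["bedroom"] - (bed_median - 1)

# 1 where the count exceeds bedroom - (median - 1), else 0
combo["privacy"] = np.where(combo["bathroom"] > threshold, 1, 0)
combo["space_area"] = np.where(combo["parking_space"] > threshold, 1, 0)
print(combo)
```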

combo[combo['marker'] == 'train'].corr(numeric_only=True)

combo.drop(['IDbath', 'IDbed'], axis=1, inplace=True)

combo['loc'].unique()

def group_by_zone(state=None):
    # group state by region
    zones = {
        'North Central': ['benue', 'kogi', 'kwara', 'nasarawa', 'niger', 'plateau', 'fct'],
        'North East': ['adamawa', 'bauchi', 'borno', 'gombe', 'taraba', 'yobe'],
        'North West': ['jigawa', 'kaduna', 'kano', 'katsina', 'kebbi', 'sokoto', 'zamfara'],
        'South East': ['abia', 'anambra', 'ebonyi', 'enugu', 'imo'],
        'South South': ['akwa ibom', 'bayelsa', 'cross river', 'delta', 'edo', 'rivers'],
        'South West': ['ekiti', 'lagos', 'ogun', 'ondo', 'osun', 'oyo']
    }
    for key, values in zones.items():
        if state in values:
            return key
    return None

group_by_zone('ekiti'),group_by_zone('lagos'),group_by_zone('adamawa')

combo['loc'] = combo['loc'].str.lower()

combo.head(2)

combo['zone'] = combo['loc'].apply(group_by_zone)

combo[['loc', 'zone']].head()
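group_by_zone scans every zone list for each row. An alternative sketch, using the same zone lists, inverts the dictionary once into a state-to-zone lookup and applies it with Series.map; states missing from the dictionary (a made-up "atlantis" below) become NaN rather than None.

```python
import pandas as pd

# Same zone lists as group_by_zone above
zones = {
    "North Central": ["benue", "kogi", "kwara", "nasarawa", "niger", "plateau", "fct"],
    "North East": ["adamawa", "bauchi", "borno", "gombe", "taraba", "yobe"],
    "North West": ["jigawa", "kaduna", "kano", "katsina", "kebbi", "sokoto", "zamfara"],
    "South East": ["abia", "anambra", "ebonyi", "enugu", "imo"],
    "South South": ["akwa ibom", "bayelsa", "cross river", "delta", "edo", "rivers"],
    "South West": ["ekiti", "lagos", "ogun", "ondo", "osun", "oyo"],
}

# Invert once: every state name becomes a key pointing at its zone
state_to_zone = {state: zone for zone, states in zones.items() for state in states}

loc = pd.Series(["Lagos", "kano", "FCT", "atlantis"])  # made-up sample values
zone = loc.str.lower().map(state_to_zone)  # unknown states map to NaN
print(zone.tolist())
```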

combo.head(3)

combo.info()

cat_cols = combo.loc[:, combo.dtypes == 'object'].columns

cat_cols = list(cat_cols)

cat_cols

cat_cols.remove('marker')

cat_cols

set(combo.columns)

num_cols_ = sorted(set(combo.columns) - set(cat_cols))  # sorted: raw set order can change between Python sessions, which would shuffle the feature order

num_cols_

num_cols_.remove('marker')

num_cols_.remove('price')

#num_cols_.remove('ID')

# _num_cols_ = ['parking_space', 'allrooms', 'bed_per_bath','IDbed',

# 'privacy',

# 'space_area',

# 'bed_per_park',

# 'bathroom',

# 'IDbath',

# 'bedroom',

# ]

# # cat_cols =

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import OneHotEncoder, StandardScaler

from sklearn.compose import ColumnTransformer

cat_pipeline = Pipeline(

steps=[(

'one_hot', OneHotEncoder(handle_unknown='ignore', sparse_output=False)  # 'sparse' was renamed 'sparse_output' in scikit-learn 1.2

)]

)

num_pipeline = Pipeline(

steps=[(

'st_scaler', StandardScaler()

)]

)

columns_transformed = ColumnTransformer(transformers=[

('num_pipe', num_pipeline, num_cols_),

('cat_pipe', cat_pipeline, cat_cols)], n_jobs=-1

)

#short transformer

columns_transformed

_train = combo[combo['marker'] == 'train']

_test = combo[combo['marker'] == 'test']

_train.shape, _test.shape

cols_to_use = cat_cols + num_cols_

# cols_to_use.remove('ID')

cols_to_use

X = _train[cols_to_use]

y = _train['price']

y.head(3)

# #uncomment to save

# y_ = pd.DataFrame(y, columns=['price'])

# y_.to_csv('processed_trainY.csv')

from sklearn.model_selection import train_test_split as TTS

#seed implemented

Xtrain, Xtest, ytrain, ytest = TTS(X, y, test_size=.2, random_state=seed)

Xtrain.head(2)

_Xtrain = columns_transformed.fit_transform(Xtrain)

_Xtest = columns_transformed.transform(Xtest)

_test = columns_transformed.transform(_test[cols_to_use])

_Xtrain.shape, _Xtest.shape, _test.shape

#store the column names after transformation

cols_transformed_names = list(columns_transformed.get_feature_names_out())

Xtrain = pd.DataFrame(_Xtrain, columns=cols_transformed_names)

Xtest = pd.DataFrame(_Xtest, columns=cols_transformed_names)

test_df = pd.DataFrame(_test, columns=cols_transformed_names)

Xtrain.columns

# from sklearn.cluster import KMeans

# cluster_cols = ['cat_pipe__zone_South South', 'num_pipe__bedroom', 'num_pipe__allrooms']

# kmeans = KMeans(n_clusters=5, random_state=seed, n_init='auto').fit(Xtrain[cluster_cols])

# Xtrain['cluster'] = kmeans.predict(Xtrain[cluster_cols])

# Xtest['cluster'] = kmeans.predict(Xtest[cluster_cols])

# test_df['cluster'] = kmeans.predict(test_df[cluster_cols])

# sns.scatterplot(x=Xtrain['cluster'], y=ytrain)

# Xtrain['cluster'] = Xtrain['cluster'].astype('category')

# Xtrain['cluster'] = Xtrain['cluster'].cat.codes

# Xtest['cluster'] = Xtest['cluster'].astype('category')

# Xtest['cluster'] = Xtest['cluster'].cat.codes

# test_df['cluster'] = test_df['cluster'].astype('category')

# test_df['cluster'] = test_df['cluster'].cat.codes

Xtrain.shape, _test.shape

#gridsearch - hyperparameter tuning

from sklearn import metrics

from catboost import CatBoostRegressor as CAT

from lightgbm import LGBMRegressor as LGBM

from xgboost import XGBRegressor as XGB

from sklearn.model_selection import GridSearchCV

cat = CAT(loss_function='RMSE', random_state=seed)

# l2_leaf_reg: coefficient of the L2 regularization term

clf = GridSearchCV(cat, param_grid={

'max_depth': [4, 5, 7, 9],

'learning_rate': [0.025, 0.035, 0.05, 0.1],

'n_estimators': [400, 1500],

'l2_leaf_reg': [0.05, 0.5, 1, 5]

}, cv=5, n_jobs=-1, scoring='neg_root_mean_squared_error', verbose=0)

clf.fit(Xtrain, ytrain)

clf.best_estimator_, clf.best_score_, clf.best_params_  # best CV RMSE 0.09355667 (best_score_ itself is negative), {'l2_leaf_reg': 1, 'learning_rate': 0.1, 'max_depth': 4, 'n_estimators': 400}

list(sorted(zip(clf.best_estimator_.feature_importances_, cols_transformed_names), reverse=True))

clf.best_params_.update({'random_state':seed}) #used: {'l2_leaf_reg': 0.5,'learning_rate': 0.05,'max_depth': 5, 'n_estimators': 400, 'random_state': 42}

clf.best_params_
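scikit-learn's scorers follow a "greater is better" convention, so scoring='neg_root_mean_squared_error' reports RMSE with its sign flipped and best_score_ comes out negative; the RMSE values noted in the comments above presumably correspond to -best_score_. A sketch on synthetic regression data, with Ridge standing in for the boosted models:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=42)

search = GridSearchCV(
    Ridge(),
    param_grid={"alpha": [0.1, 1.0, 10.0]},
    cv=5,
    scoring="neg_root_mean_squared_error",  # higher is better, so RMSE is negated
)
search.fit(X, y)

best_rmse = -search.best_score_  # flip the sign back to get a positive RMSE
print(search.best_params_, round(best_rmse, 3))
```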

from sklearn.ensemble import GradientBoostingRegressor

boost = GradientBoostingRegressor(loss='squared_error', random_state=seed)

clf_boost = GridSearchCV(boost, param_grid={

'max_depth': [3, 5, 7],

'learning_rate': [0.05, 0.1],

'alpha': [0.05, 0.5, 0.7],  # only used when loss='huber' or 'quantile', so it has no effect with squared_error

'max_features': [8,30,55, 60]

}, cv=5, n_jobs=-1, scoring='neg_root_mean_squared_error', verbose=0)

clf_boost.fit(Xtrain, ytrain)

clf_boost.best_estimator_, clf_boost.best_score_, clf_boost.best_params_ #0.094

clf_boost.best_params_.update({'random_state': seed})

clf_boost.best_params_

list(sorted(zip(clf_boost.best_estimator_.feature_importances_, Xtrain.columns), reverse=True))

xgb = XGB(n_jobs=-1, random_state=seed)

cat = CAT(**clf.best_params_)

# rfc = RFC(n_jobs=-1, random_state=seed)

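lgbm = LGBM(**clf.best_params_)  # reuses CatBoost's tuned params; LightGBM doesn't recognise 'l2_leaf_reg' (its L2 term is reg_lambda), so that key is likely ignored with a warning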

gbr = GradientBoostingRegressor(**clf_boost.best_params_)

print(Xtrain.shape, ytrain.shape)

print(Xtest.shape, ytest.shape)

print(_test.shape)

scores = []

test_preds = []

models_ = []

train_X = Xtrain

test_X = Xtest

test_ = test_df

for model in [cat, lgbm, xgb, gbr]:
    # elif ensures each model is fitted exactly once
    if model is xgb:
        model.fit(train_X, ytrain, eval_set=[(test_X, ytest)])
    elif model in [cat, lgbm]:
        model.fit(train_X, ytrain, eval_set=(test_X, ytest))
    else:
        model.fit(train_X, ytrain)
    # validation RMSE as sqrt(MSE); the squared=False flag is deprecated in newer scikit-learn releases
    val_pred = model.predict(test_X)
    scores.append([model.__class__.__name__, np.sqrt(metrics.mean_squared_error(ytest, val_pred))])
    test_preds.append(model.predict(test_))
    models_.append(model)

scores, test_preds #0.09152509120742014

list(zip(train_X.columns,models_[0].feature_importances_)), list(zip(train_X.columns, models_[2].feature_importances_))

from sklearn.linear_model import Ridge

ridge = Ridge(random_state=seed, max_iter=700)

ridge.fit(train_X, ytrain)

c = ridge.predict(test_)

v = np.expm1(c)*0.5 + np.expm1(test_preds[0])*0.3 + np.expm1(test_preds[1]) * 0.2

v
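The blend v is a weighted arithmetic mean of the models' predictions after undoing the log transform. A small helper sketch (the name blend and the made-up predictions are illustrative) that also checks there is one weight per model and that the weights sum to 1. Averaging in price space like this is not the same as averaging the log1p predictions and then applying np.expm1, which would behave roughly like a weighted geometric mean.

```python
import numpy as np


def blend(log_preds, weights):
    """Weighted mean of several models' predictions, taken in the original price space.

    log_preds: list of 1-D arrays, each holding one model's log1p(price) predictions.
    weights: one weight per model, expected to sum to 1.
    """
    weights = np.asarray(weights, dtype=float)
    if len(weights) != len(log_preds) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("need one weight per model, summing to 1")
    prices = np.expm1(np.vstack(log_preds))  # undo log1p: shape (n_models, n_rows)
    return weights @ prices  # weighted sum over models for every row


# Made-up predictions for two houses from three hypothetical models
ridge_pred = np.log1p(np.array([1_000_000.0, 2_000_000.0]))
cat_pred = np.log1p(np.array([1_200_000.0, 1_800_000.0]))
lgbm_pred = np.log1p(np.array([1_100_000.0, 2_200_000.0]))

v = blend([ridge_pred, cat_pred, lgbm_pred], [0.5, 0.3, 0.2])
print(v)
```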

# array([2250188.09538975, 1021480.99448109, 1250830.58885351, ...,

# 2015230.89372306, 1346352.30086387, 3334390.27243935])

from sklearn.linear_model import HuberRegressor

g = GridSearchCV(HuberRegressor(),param_grid={

'alpha': [0.003, 0.03, 0.3, 1, 10, 100],

'max_iter': [300, 500, 700, 1000],

'tol': [0.0001, 0.001, 1, 0.1, 0.0005]

}, cv=5, n_jobs=-1, scoring='neg_root_mean_squared_error', verbose=1)

g.fit(Xtrain, ytrain)

g.best_estimator_, g.best_score_, g.best_params_ #0.094

rg = HuberRegressor(**{'alpha': 0.3, 'max_iter': 300, 'tol': 1})

rg.fit(Xtrain, ytrain)

f = rg.predict(test_df)

f = np.expm1(f)

f

k =f*0.6 + np.expm1(test_preds[0])*0.4

k

f*0.4 + np.expm1(c)*0.25 + np.expm1(test_preds[0])*0.35

ID = pd.read_csv('Sample_submission.csv')['ID']

submission_cat = pd.DataFrame({

'ID': ID,

'price': np.expm1(test_preds[0])

})

submission_rcl = pd.DataFrame({

'ID': ID,

'price':v

})

submission_hc = pd.DataFrame({

'ID': ID,

'price': k

})

submission_hrc = pd.DataFrame({

'ID': ID,

'price': f*0.4 + np.expm1(c)*0.25 + np.expm1(test_preds[0])*0.35

})

submission_cat.head(3)

submission_rcl.head(3)

submission_hc.head(3)

submission_hrc.head(3)

# submission.to_csv('meh.csv', index=False)

# submission_c.to_csv('combo.csv', index=False)

submission_cat.to_csv('CAT_submission.csv', index=False)

submission_rcl.to_csv('RCL_submission.csv', index=False)

submission_hc.to_csv('HC_submission.csv', index=False)

submission_hrc.to_csv('HRC_submission.csv', index=False)

#save model

import joblib

joblib.dump(cat, 'catt.joblib')

joblib.dump(lgbm, 'lgbmm.joblib')

joblib.dump(ridge, 'ridge.joblib')

joblib.dump(rg, 'huber.joblib')

#save processed csv

Xtrain.to_csv('processed_xtrainx.csv', index=False)

Xtest.to_csv('processed_xtestx.csv', index=False)

test_df.to_csv('processed_testt.csv', index=False)

The gratification of sitting in the top 3% of the leaderboard can only last for so long; the big(ger) deal is staying in the top 3% after the shakedown, or maybe taking top place?