Covid-19 Forecasting (NG)

Hello there, I've been gone for a long time, but you know, not like it was noticeable or anything. cries in Eigenvectors. Well, if you must know the reason for my being gone for such a long time then look no further than work, school, work, sleep, anime, sleep and just being generally lazy. Anyhow, 2020, I mean, right?

Moving on...

TL;DR¶

The models show large daily confirmed cases and suggest that easing the lockdown would not be such a good idea at this point, even daily reported cases show that.

Aim¶

With the established mathematical models in this post, I try to forecast the spread of the covid-19 virus over the next 12 days in Nigeria on a national level. It should give a sense of what is to come but do not take it wholeheartedly as a shortage of resources is a big factor in making the models. The plan initially was to make a model to predict on a state level from scraped data, but the downtimes and layout changes on NCDC's site ruined that plan making already scraped data inept for this task.

Limitation¶

In order to make reliable models for cases like this, modelers rely heavily on historic data from prior outbreaks which can help with insights and boosting metrics. There aren't any (beneficial) historic data in this case, and researchers know very little about the disease to consider as predictors for modeling. No actual telling how long the virus can live on everyday objects. No sure effect of weather conditions being a factor in the spread.

And as such the data is limited. Hence, overfitting is inevitable. Also, there's the questionable quality of the collected data due to human and machine error, especially with things being rushed.

There are also the pattern irregularities associated with pandemics, such as this and making pattern finding a little bit strenuous, and since the model is on a national level, external data that could have proven useful are not included; isolation and testing centers, population density, covid-19 aids by states.

Assumption¶

The model assumes the travel ban as announced by the Federal Government of Nigeria was enforced on the stipulated date, and equally works with the view of a subset of the public concerning the proposed lockdown and social distancing: Relaxed, Strict, and Not enforced. It also projects that said interventions will remain in place for future dates in which our testing falls.

In addition, the model (rightly) assumes that I'm not an Epidemiologist(check out SEIR models here) and that, in fact, I'm only a brooding Data Scientist.

Method¶

Two standalone nonlinear models and an ensemble after fitting reported confirmed cases and some derived features.

Let's proceed!

#Import packages/tools

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import seaborn as sns

import plotly.express as px

from sklearn.preprocessing import StandardScaler

# from sklearn.base import BaseEstimator, TransformerMixin

# from sklearn.pipeline import Parallel, Pipeline, FeatureUnion

from catboost import CatBoostRegressor

from lightgbm import LGBMRegressor

from xgboost import XGBRegressor

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import KFold

from sklearn.metrics import mean_squared_error as mse

from IPython.core.display import HTML

import warnings

warnings.filterwarnings('ignore')

sns.set(style='white', font_scale=1.2, rc={"figure.figsize": (15,10)})

colors_ = {

'background': '#111111',

'text': '#BEBEBE',

'grid': '#333333',

'red': '#BF0000'

}

tickFont = {

'size':12,

'color':"rgb(30,30,30)",

'family':"Courier New, monospace"}

SEED = 9

RANDOM_STATE = 5

# from plotly.offline import init_notebook_mode, plot,iplot

# import cufflinks

# init_notebook_mode(connected=True)

# cufflinks.go_offline()

# import chart_studio.plotly as py

# pd.set_option("max_rows", 20)

Next line: Data containing the cumulated confirmed cases(as well as Recovery and Deaths) daily, country and the data is loaded. I had to drop columns I won't be using such as Province/State, Cumulated Deaths and Recovery and the date is parsed as datetime.

Data Source: JohnsHopkinsUni

# data = pd.read_csv("~/NG_covid19_ML/data/allData.csv")\

# .drop(["Province/State"], axis=1)\

# .astype({'date':'datetime64[ns]'})

# # data.head(3)

#rename Country/Region to just Country

data.rename(columns={'Country/Region':'Country'}, inplace=True)

data.isna().sum() #No null values. Good!

Let's dedicate a couple of lines to making graphs: worldwide and then Africa.

countries_to_plot = ["US", "Italy", "China", "Germany", "Ghana", "Morocco", "Nigeria", "South Africa", "Tunisia"]

country_group = data.groupby(['date', 'Country'], as_index=False)['CumConfirmed'].sum()

xc = country_group[country_group["Country"].isin(countries_to_plot)]

trend_fig = px.line(xc, x="date", y="CumConfirmed", color="Country").for_each_trace\

(lambda rmvequalsign: rmvequalsign.update(name=rmvequalsign.name.split("=")[-1]))

trend_fig.update_traces(

line=dict(width=3),

selector=dict(type="scatter", mode="lines")

)

trend_fig.update_layout({'legend_orientation':'h', 'autosize':True, 'margin':dict(l=0.5,r=0.5,b=2,t=3)}, font=tickFont)\

.update_xaxes(title="", tickfont=tickFont)\

.update_yaxes(title="Cumulated Confirmed Cases")

#african countries have lower cases so it's hard sighting them on the graph\

#(thousands compared to a million)

countries_to_plot = ["Ghana", "Morocco", "Nigeria", "South Africa", "Tunisia", "Kenya"] #6 african countries

country_group = data.groupby(['date', 'Country'], as_index=False)['CumConfirmed'].sum()

xc = country_group[country_group["Country"].isin(countries_to_plot)]

trend_fig = px.line(xc, x="date", y="CumConfirmed", color="Country").for_each_trace\

(lambda rmvequalsign: rmvequalsign.update(name=rmvequalsign.name.split("=")[-1]))

trend_fig.update_traces(

line=dict(width=3),

selector=dict(type="scatter", mode="lines")

)

trend_fig.update_layout({'legend_orientation':'h', 'autosize':True, 'margin':dict(l=0.5,r=0.5,b=2,t=3)}, font=tickFont)\

.update_xaxes(title="", tickfont=tickFont)\

.update_yaxes(title="Cumulated Confirmed Cases")

#I shall be extracting data for Nigeria only

NG_data = data[data["Country"] == "Nigeria"]

NG_data.reset_index(drop=True, inplace=True)

#peep into the data and check last accounted date

NG_data.tail() #19th, May 2020

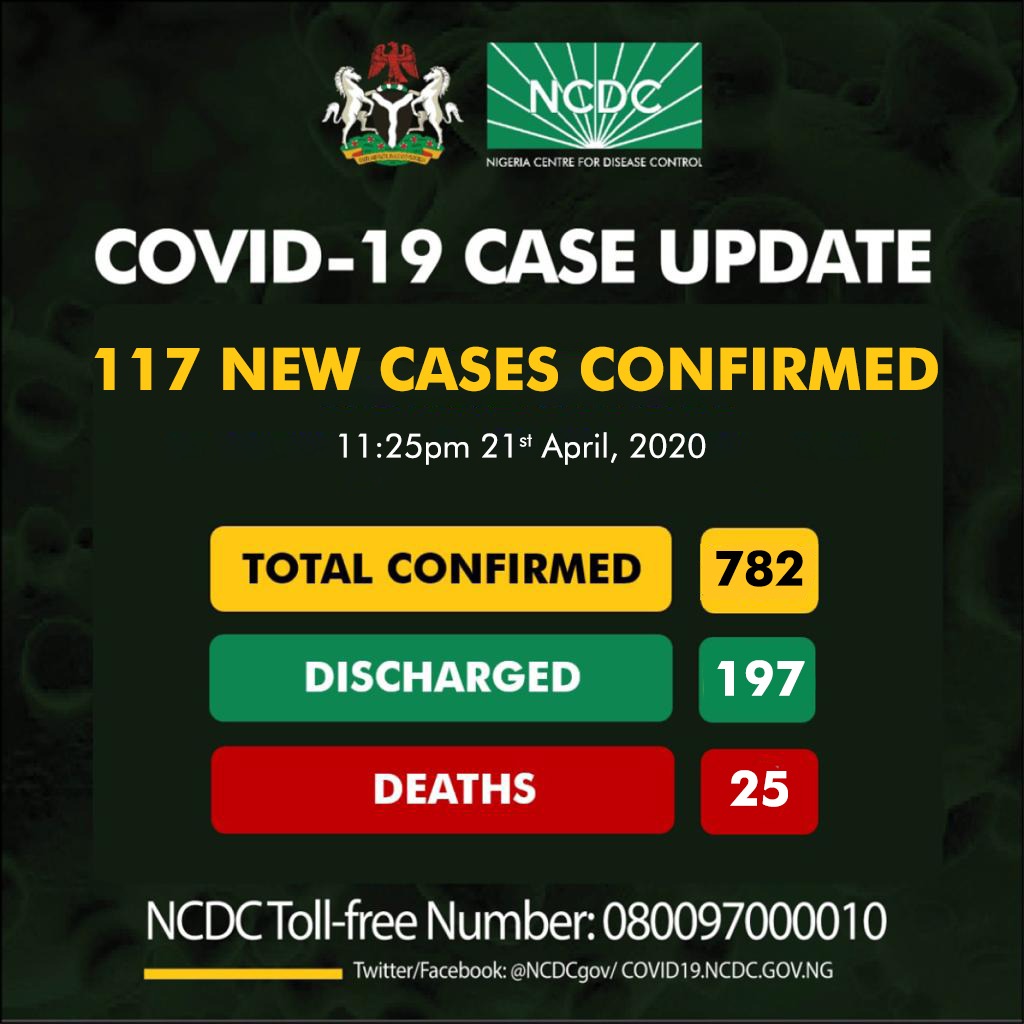

Going through the data I noticed that the 20th and 21st of April had the same value of 665, which would indicate that there was no case on the 21st but there was. One of the highest cases ever recorded: 117. I checked the NCDC's site to confirm and made the required changes to that index. Also, the first case in Nigeria wasn't till the 28th of February so I had to tailor the data starting from the 27th of February, just to get rid of that long list of zeros.

Let's investigate together!

NG_data[NG_data["CumConfirmed"] > 0].head(12) #easily identify first reported case - 28th.

#No further case until the 9th of March. Take note of the index (37, so tailor from 36)

NG_data = NG_data.iloc[36:, :] #Tailored!

NG_data.reset_index(drop=True, inplace=True)

NG_data.head()

NG_data.iloc[50:60] #note index 53 and 54

fig = px.line(NG_data, x='date', y='CumDeaths', title="Cumulated Deaths by date")

fig.update_layout({

'paper_bgcolor': '#f2f2f2'

})

fig.update_traces(line_color="red")

fig = px.line(NG_data, x='date', y='CumRecovered', title="cumulated recovery by date")

fig.update_layout({

'paper_bgcolor': '#f2f2f2'

})

fig.update_traces(line_color="green")

fig = px.line(NG_data, x='date', y='CumConfirmed', title="cumulated confirmed cases")

fig.update_layout({

'paper_bgcolor': '#f2f2f2'

})

fig.update_traces(line_color="blue")

Could you tell that flat curve just after 'Apr 19,' yeah? That's the culprit; 665 both on the 20th and 21st, while this could be a good thing meaning Nigeria had no new case on the 21st, we know that's false. In fact, we had 117 new cases on the 21st, summing it to 782. Hence, (20th) 665 - (21st) 782 - (22nd) 873 and so forth.

Also, NCDC's correction for the 18th of April: 541 instead of 542. Index 51.

Proof has been included below and update is to be made.

#index 54, column CumConfirmed, replace 665 with 782

NG_data.at[54, 'CumConfirmed'] = 782

NG_data.iloc[53:56, :] #perfection!

Notice how I did not update the values for Cumulative Death and Recovered, as this won't be used in the model.

NG_data.at[51, 'CumConfirmed'] = 541

NG_data.iloc[50:53, :]

#re-draw.

fig = px.line(NG_data, x='date', y='CumConfirmed')

fig.update_layout({

'paper_bgcolor': '#f2f2f2'

})

fig.update_traces(line_color="blue")

Now, let's use dataFrame.diff() on the CumConfirmed Column so we can get the daily cases from it, convert possible NaNs to zeros, which should be only one at the very top(0 - 'no value' = NaN)

NG_data["DailyConfirmed"] = NG_data["CumConfirmed"].diff()

NG_data.head()

NG_data.fillna(axis=1, value=0, inplace=True)

NG_data.head(5)

The below graph shows that daily cases are rising fast. Noting that the virus can have an incubated window of 14 days, it's telling that the quarantine is not quite effective.

#cases on a daily basis

fig = px.line(NG_data, x='date', y='DailyConfirmed')

fig.update_layout({

'paper_bgcolor': '#f2f2f2'

})

fig.update_traces(line_color="purple")

#drop recovery and death cases

NG_data.drop(["Country", "CumRecovered", "CumDeaths"], axis=1, inplace=True)

Now, let's slip in data for our testing. I want to make predictions for the next 8 days, starting on the 28th, and then merge with our data on the row level.

post_date = ['2020-05-20','2020-05-21', '2020-05-22', '2020-05-23', '2020-05-24', '2020-05-25', '2020-05-26','2020-05-27', '2020-05-28','2020-05-29','2020-05-30','2020-05-31']

post_incident = pd.DataFrame(post_date, columns=["date"])

post_incident["date"] = post_incident['date'].astype('datetime64[ns]')

post_incident #the next 12 days

covid19_NG = pd.concat([NG_data, post_incident], sort=False, axis=0)

covid19_NG.reset_index(drop=True, inplace=True)

covid19_NG.tail(15)

covid19_NG["day"] = covid19_NG.date.dt.strftime("%m%d").astype('int')

covid19_NG.tail(20)

I checked around for news that could be of value in terms of engineering features.

21-03-2020: NG bans entry of travelers from 13 countries(incl. US, Uk, Italy, France and China) [ng.usembassy.gov] Ban to last for 4 weeks.

23-03-2020: Closure of land borders [vanguard]. Same with schools.

28-03-2020: GTB hands over 110-bed isolation centre to Lagos State Gov.

08-04-2020: 110-bed isolation centre by GTB begins operation. [Allafrica]('https://allafrica.com']

31-03-2020: Lockdown as announced by Pres. Buhari (Starts 11:30PM on the 30th, so next day then)

14-04-2020: Another lockdown starts. 04-03-2020: No single isolation centre in Abuja says Senate President. [Punch.ng] 27-01-2020: First Nigeria case. NCDC

21-02-2020: covid19 training starts ncdc.gov.ng

update Lockdown lifted on the 4th of May, 2020. Travel Ban still in place.

def assumed_lkdown(val):

if val <= 330:

return "Not Enforced"

if val >= 331 and val <= 402: #first three days after proposed lockdown

return "Relaxed"

elif val >= 403 and val <=410: #police mobilization and lockdown security goes up

return "Tight"

elif val >= 504:

return "Lifted"

else:

return "Relaxed"

#Lagos, Abuja, and Kano hold high cases and the assumed lockdown was modelled from them.

covid19_NG["Lockdown"] = covid19_NG["day"].apply(assumed_lkdown)

covid19_NG[covid19_NG["Lockdown"] == "Lifted"].head()

covid19_NG["Travel Ban"] = covid19_NG["day"].apply(lambda x: "Enforced" if x >= 321 else "Not Enforced")

covid19_NG[covid19_NG["Travel Ban"] == "Enforced"].head(3)

#321 - 21st of March, when ban became effective.

covid19_NG["Border closure"] = covid19_NG["day"].apply(lambda x: "Enforced" if x >= 323 else "Not Enforced")

covid19_NG[covid19_NG["Border closure"] == "Enforced"].head(3)

covid19_NG["dayName"] = covid19_NG.date.dt.day_name()

covid19_NG.head()

sns.barplot(data=covid19_NG, x="dayName", y="DailyConfirmed")

print("Highest reported cases in Nigeria: ", covid19_NG["DailyConfirmed"].max())

covid19_NG[covid19_NG["DailyConfirmed"] == covid19_NG["DailyConfirmed"].max()]

covid19_NG.tail(11)

covid19_NG.drop(["date"], axis=1, inplace=True) #drop date

#Log transform DailyConfirmed. NaNs won't be transformed

covid19_NG["DailyConfirmed"] = np.log1p(covid19_NG["DailyConfirmed"])

covid19_NG = pd.get_dummies(covid19_NG, drop_first=True) #get dummies

covid19_NG.head()

Let's split the data into train, validation and testing.

train = covid19_NG[covid19_NG["day"] <= 514] #Train on this

val = covid19_NG[(covid19_NG["day"] >= 515) & (covid19_NG["day"] <= 519)] #evaluate on this

test = covid19_NG[covid19_NG["day"] >= 520] #test on this

print("Original data shape: ", covid19_NG.shape, "\nTrain shape: ", train.shape, "\nValidation data shape: ",val.shape, "\nTest data shape: ",test.shape)

#Predict for the rest of the month starting from May 20th, 2020.

Get ready for TRAINING and TESTING!

Xtrain = train.drop(["CumConfirmed", "DailyConfirmed"], axis=1)

ytrain = train["DailyConfirmed"]

Xval = val.drop(["CumConfirmed", "DailyConfirmed"], axis=1)

yval = val["DailyConfirmed"]

Xtest = test.drop(["CumConfirmed", "DailyConfirmed"], axis=1)

Xtrain.head(3)

from sklearn.preprocessing import MinMaxScaler

ss = StandardScaler()

mm = MinMaxScaler() #default=(0,1)

Xtrain_std = ss.fit_transform(Xtrain)

Xval_std = ss.transform(Xval)

Xtest_std = ss.transform(Xtest)

from sklearn.metrics import mean_squared_error as mse

cat_params = {

'num_boost_round':10000,

'learning_rate': 0.005,

'silent':True,

'eval_metric': 'RMSE',

'early_stopping_rounds':200,

'max_depth':9,

'use_best_model':True,

'random_state': RANDOM_STATE

}

cat_model = CatBoostRegressor(**cat_params)

rf_model = RandomForestRegressor(n_estimators=10000, random_state=RANDOM_STATE, max_depth=24)

#¶

%%time

cat_model.fit(Xtrain, ytrain, eval_set=[(Xtrain, ytrain)])

print("Best Score: ", cat_model.best_score_, "\nIteration stopped at: ", cat_model.best_iteration_)

train_pred_cat = cat_model.predict(Xtrain)

cat_rmse = np.sqrt(mse(train_pred_cat, np.expm1(ytrain)))

print("RMSE: ", cat_rmse)

val_pred = cat_model.predict(Xval)

print("Predicted values: ", list(np.round(np.expm1(val_pred), decimals=0)))

print("Actual values: ", list(np.round(np.expm1(yval), decimals=0)))

print("RMSE: ", np.sqrt(mse(val_pred, np.expm1(yval))))

test_pred_cat = cat_model.predict(Xtest)

test_pred_cat #log-transformed

# xg_model = XGBRegressor(n_estimators=20000, learning_rate=0.0005)

### Random Forest¶

%%time

rf_model.fit(Xtrain, ytrain)

train_pred_rf = rf_model.predict(Xtrain)

print("RMSE (TRAIN): ", np.sqrt(mse(train_pred_rf, np.expm1(ytrain))))

print("######")

#evaluation

val_pred_rf = rf_model.predict(Xval)

print("Predicted values: ", list(np.round(np.expm1(val_pred_rf), decimals=0)))

print("Actual values: ", list(np.round(np.expm1(yval), decimals=0)))

print("RMSE (EVALUATION): ", np.sqrt(mse(val_pred_rf, np.expm1(yval))))

#test prediction

test_pred_rf = rf_model.predict(Xtest)

# list(np.round(np.expm1(covid19_NG.DailyConfirmed[:-9]), decimals=0))

#Neural network modelling

from keras.wrappers.scikit_learn import KerasRegressor

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

def build_model():

model = keras.Sequential([

layers.Dense(32, activation='relu', input_shape=[Xtrain.shape[1]]),

layers.Dense(16, activation='relu'),

layers.Dense(1)

])

optimizer = tf.keras.optimizers.RMSprop(0.003)

model.compile(loss='mse',

optimizer=optimizer,

metrics=['mse'])

return model

model = build_model()

model.summary()

Xtrain_mm = mm.fit_transform(Xtrain)

# Xval_mm = mm.transform(Xval)

Xtest_mm = mm.transform(Xtest)

model.fit(Xtrain_mm, ytrain)

model.predict(Xtrain_mm)

from keras.callbacks import EarlyStopping#set early stopping monitor so the model stops training when it won't improve anymore

early_stopping_monitor = EarlyStopping(patience=3)

EPOCHS = 10000

history = model.fit(

Xtrain_mm, ytrain,

epochs=EPOCHS, validation_split = 0.2, verbose=0,

callbacks=[early_stopping_monitor]

)

hist = pd.DataFrame(history.history)

hist['epoch'] = history.epoch

hist.tail()

# test_predictions = model.predict(Xtest_mm).flatten()

# np.expm1(test_predictions)

#ensemble by weight

cat_pred = np.round(np.expm1(test_pred_cat), decimals=0)

rf_pred = np.round(np.expm1(test_pred_rf), decimals=0)

ensemble = 0.6*cat_pred + 0.4*rf_pred

test_predictions = pd.DataFrame({

"date": post_incident["date"],

"New Cases (CAT)": [int(x) for x in cat_pred],

"New Cases (Random Forest)": [int(x) for x in rf_pred],

"Ensemble": [int(x) for x in ensemble],

})

test_predictions

Overfitting was expected due to the data being constrained to a small size.

Overfitting was expected due to the data being constrained to a small size.

test_predictions.to_csv("submission.csv", index=False, header=True)

Disclaimer¶

The predicted values are not to be substituted for actual future cases. The table is merely a forecast, purely for learning purposes.

Resources¶

- Know more about the COVID-19 virus here.

- Ramadan advisory by NCDC here

- If you'd like to view a COVID-19 Dashboard, I made one for just African countries: click here to view the dashboard